Strategies for Safe Perception

What are some practical ways to ensure the safety of autonomous perception systems?

This is a question autonomous vehicle developers often ask. Some standards groups, such as the one behind SOTIF (ISO 21448), have been working on this problem for over six years and still offer very few practical solutions for perception safety.

Over the past three years, we have worked with a growing number of autonomous technology customers, as well as standards and testing groups such as IAMTS, and we have helped guide them toward truly safe strategies in autonomous perception.

Product liability concerns relating to autonomous perception are huge. In this blog post, we’ll share some concepts that can help readers develop and validate their own perception safety concepts and fulfill state-of-the-art safety standards such as ISO 26262 and IEC 61508.

1st Concept: Perception isn’t one function

What is “Perception”? At its simplest, it means telling whether something is there or not. In practice, autonomous developers have to figure out a lot more than simply whether something “is there.” They need to know what “it” is, where it is, and how accurately that position is known. They need to know how fast it is moving and in which direction. And they often need to know something contextual about its nature and intent: is it a pedestrian, a car, a curb, etc.

So, Perception certainly entails a lot, but let’s set all those other considerations aside for a moment. If we boil Perception down to simply mean, “Is something there or not?” then even this very simple definition is actually made up of three important functions that are rarely recognized:

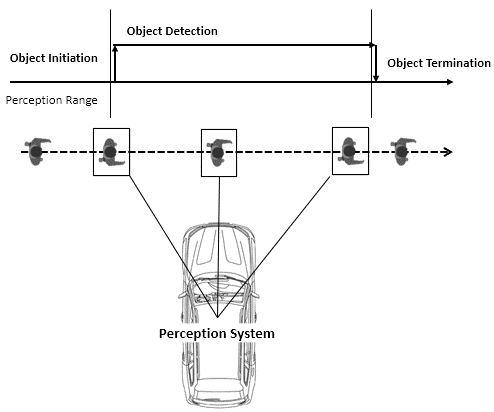

Initiation -> Detection -> Termination

By separating these three distinct functions within Perception, we can begin to solve each one separately, and work them together for a robust safety concept. Here’s a simple illustration of how these three functions comprise Perception:

Perception is actually three functions: Initiation, Detection, and Termination

Why is this helpful? Well, for starters, we know from our experience that this depiction of Perception quickly shortens the time needed for developers to agree to the perception requirements. And we’ll get into why that’s the case.

By untangling “Initiation” performance from “Termination” performance, developers clearly see what they are accountable for and can more easily commit to focusing on these separate aspects. It relieves the Perception team of having to be perfect all the time. Under certain conditions, more “Initiations” and fewer “Terminations” will be expected and required of them. In other conditions, the balance may be reversed.

This directly helps the Safety team, as well, by untangling the “Initiations” from the “Terminations.” Under certain conditions, there can be safety-critical, must-have requirements for when a Perception system must Initiate an object. If it turns out to be a momentary false positive due to sensor limitations, then it may be Terminated to fulfill quality requirements. Safety vs. quality requirements can be structured for an appropriate balance of both.
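To make the separation concrete, here is a minimal Python sketch of a single object track whose Initiation and Termination behavior are tuned independently. The names (`TrackPolicy`, `hits_to_initiate`, `misses_to_terminate`, `run_track`) and the simple consecutive-frame counting rule are our own illustrative assumptions, not taken from any standard or product.

```python
from dataclasses import dataclass
from enum import Enum


class TrackState(Enum):
    NONE = "none"              # nothing reported yet
    INITIATED = "initiated"    # object reported for the first time
    DETECTED = "detected"      # object confirmed and maintained
    TERMINATED = "terminated"  # object dropped


@dataclass(frozen=True)
class TrackPolicy:
    """Hypothetical tuning knobs; the values used below are illustrative only."""
    hits_to_initiate: int      # consecutive sensor hits before reporting an object
    misses_to_terminate: int   # consecutive sensor misses before dropping it


def run_track(sensor_hits, policy):
    """Return the track state after each frame (True means the sensor saw it)."""
    state = TrackState.NONE
    hits = misses = 0
    history = []
    for hit in sensor_hits:
        # Count consecutive hits and consecutive misses.
        if hit:
            hits, misses = hits + 1, 0
        else:
            hits, misses = 0, misses + 1

        if state in (TrackState.NONE, TrackState.TERMINATED):
            # Initiation requirement: how quickly must an object be reported?
            if hits >= policy.hits_to_initiate:
                state = TrackState.INITIATED
        elif misses >= policy.misses_to_terminate:
            # Termination requirement: how long do we hold on after losing it?
            state = TrackState.TERMINATED
        elif hit:
            state = TrackState.DETECTED
        history.append(state)
    return history


# Safety-biased: report early, hold on longer (more Initiations, fewer Terminations).
SAFETY_BIASED = TrackPolicy(hits_to_initiate=1, misses_to_terminate=3)
# Quality-biased: wait for confirmation, drop quickly (fewer false positives).
QUALITY_BIASED = TrackPolicy(hits_to_initiate=3, misses_to_terminate=1)

frames = [True, False, True, True, True]
safety_history = run_track(frames, SAFETY_BIASED)
quality_history = run_track(frames, QUALITY_BIASED)
```

With this flickering input, the safety-biased policy reports the object on the first frame and keeps it through the dropout, while the quality-biased policy does not report anything until the fifth frame. The point is only that the two behaviors are set by two separate requirements.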

“[T]his depiction of Perception quickly shortens the time needed for developers to agree to the perception requirements”

2nd Concept: Perception doesn’t have the final word

Perception will certainly affect the output of the autonomous vehicle, but, if done well, Perception shouldn’t immediately control the output. We can use this to our advantage by recognizing the regions in which the immediate path planning would be affected and the regions in which it wouldn’t.

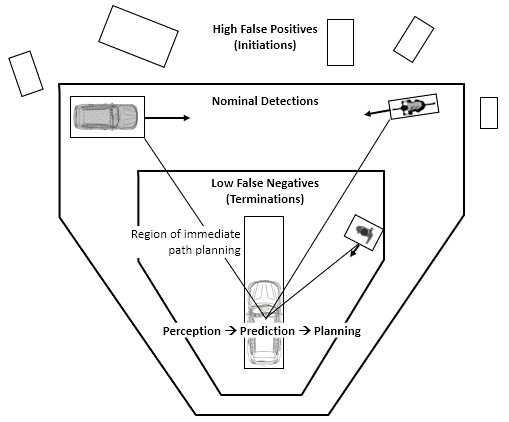

If we understand the basic autonomy architecture to be: Perception -> Prediction -> Planning, then Prediction and Planning can be key enablers for supporting safe Perception. They can take into account the performance limitations and requirements that have already been defined and agreed to, and Predict and Plan in a way that accepts Perception’s limitations.

Going back to the Initiation-Detection-Termination functions in Perception, we can apply those functions differently across regions to ensure the immediate path planning region is safe. The following illustration captures just one simple example of how we can leverage performance differences between Initiation, Detection, and Termination, by requiring more Initiations further away, and fewer Terminations closer to the vehicle’s path:

Prediction and Planning can act as buffers to enable truly safe Perception
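The region idea can be sketched as a lookup that returns different Initiation and Termination requirements depending on where an object sits relative to the vehicle. This is a minimal sketch: the region boundaries (`FAR_RANGE_M`, `IN_PATH_HALF_WIDTH_M`), the frame counts, and the function name `policy_for` are hypothetical placeholders, and real values would come out of the agreed requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionPolicy:
    hits_to_initiate: int      # fewer hits required means more Initiations
    misses_to_terminate: int   # more misses required means fewer Terminations


# Hypothetical region boundaries, for illustration only.
FAR_RANGE_M = 60.0             # beyond this, Planning has time to react
IN_PATH_HALF_WIDTH_M = 2.0     # lateral half-width of the immediate path


def policy_for(range_m, lateral_offset_m):
    """Pick Initiation/Termination requirements for an object's region."""
    far = range_m >= FAR_RANGE_M
    in_path = abs(lateral_offset_m) <= IN_PATH_HALF_WIDTH_M

    # Far away: initiate eagerly. A momentary false positive costs little,
    # because Prediction and Planning still have distance to absorb it.
    hits_to_initiate = 1 if far else 2

    # Close and in the path: be reluctant to terminate. Dropping a real
    # object here would immediately affect the planned path.
    misses_to_terminate = 10 if (in_path and not far) else 3

    return RegionPolicy(hits_to_initiate, misses_to_terminate)


far_object = policy_for(range_m=80.0, lateral_offset_m=0.0)
close_in_path = policy_for(range_m=10.0, lateral_offset_m=0.5)
close_off_path = policy_for(range_m=10.0, lateral_offset_m=5.0)
```

Writing the regions down this explicitly is what lets Prediction and Planning know which Perception behavior they can rely on in each region.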

Once again, the value in this concept is that developers are able to accept the requirements quickly, compared to requirements that are only focused on safety, or only focused on quality. With this approach, the tradeoff between quality and safety does not place them in an impossible position. Without it, there’s a lingering problem of liability hanging over their heads if they tune their performance too far one way or the other.

It requires forethought and an understanding of what is feasible for certain technologies, but concepts like these could make Perception performance robust due to their high degree of diverse redundancy, coverage, and strategic biasing of failure modes.

3rd Concept: Perceiving that you can’t see

We defined Perception at the very beginning as being able to tell if something is there or not. We intuitively think of vehicles detecting common road objects: pedestrians, vehicles, cyclists, and so on. But Perception can also tell if more abstract conditions are present.

For example, let’s imagine you open your eyes and you can’t see the wall or the door in front of you. It doesn’t mean the wall and the door aren’t there. It may simply mean the lights are off and you can’t see the door or wall. If everything you see around you is dark, then you probably conclude that the light is off, and the conditions aren’t good for seeing things that you are pretty sure are there. You may rely on an alternative sensing function, such as using your hands and feet to feel things, and proceed slowly and cautiously to find the door.

The same is true in autonomous Perception. To begin with, let’s think of the simplest example: an occluded space. These are spaces behind a parked car, or around a building, where the autonomous vehicle can’t see what is occupying that space. Because we see visible light with our eyes, occluded spaces are very intuitive problems for us to think about.

But autonomous vehicles have other “eyes” that see more than visible light. Radar, for example, can potentially see through solid objects, but it also has unique limitations that make it difficult to tell if something is there or not, such as when highly reflective surfaces are present. Just like physically occluded spaces, these sensor-specific occlusions need to be recognized and treated cautiously.

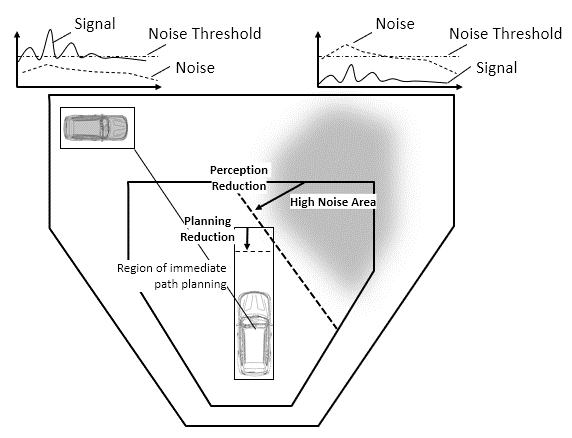

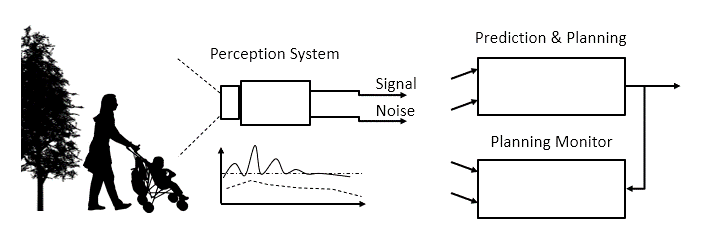

The universal term we’ll use to refer to these virtual “occluded” spaces is noise. Radar, cameras, LiDAR, ultrasonic sensors, etc., all have some noise associated with the raw and filtered signals used to detect whether an object is present or not. But the fusion functions, Kalman filters, and physics models have noise as well. The following illustration shows how the concepts of signal and noise can be separated and independently measured:

“Noise” can be an independent detection by the Perception System

The safety advantage to this is that by measuring noise feedback from all the sensing functions in the Perception System, the vehicle can be programmed to tell when the sensor may be having a difficult time seeing, and still react safely.

When we, as human drivers, experience a sudden wave of water or mud on our windshield, we may know there is nothing in front of us, but we will probably slow down anyway. We make safe adjustments because we can instantly see that we can’t see. Autonomous vehicles have to do the same thing.
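One simple way to picture “slowing down because you can’t see” is to cap speed so that the vehicle can always stop within the range it can currently trust. The sketch below assumes that the noise measurement has already been translated into a trusted range in meters, and it uses a constant-deceleration model; the function names, the deceleration, and the reaction time are illustrative assumptions, not recommended values.

```python
import math


def stopping_distance(speed_mps, decel_mps2=4.0, reaction_s=0.5):
    """Distance covered during the reaction time plus constant braking."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2.0 * decel_mps2)


def max_safe_speed(trusted_range_m, decel_mps2=4.0, reaction_s=0.5):
    """Largest speed whose stopping distance fits inside the trusted range.

    Solves v * t + v^2 / (2 * a) = d for v, taking the positive root.
    """
    if trusted_range_m <= 0.0:
        return 0.0
    at = decel_mps2 * reaction_s
    return -at + math.sqrt(at * at + 2.0 * decel_mps2 * trusted_range_m)


clear_day_cap = max_safe_speed(trusted_range_m=100.0)
muddy_windshield_cap = max_safe_speed(trusted_range_m=15.0)
```

As the trusted range shrinks, the speed cap shrinks with it, and it reaches zero when the vehicle cannot trust anything it sees.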

Uncertainty signals, particularly those in the Kalman filters and fusion functions, may be useful noise signals. But, if possible, the best method is to create the actual environmental conditions, such as dust or metallic clutter, that make it difficult for certain objects to be detected, and then use the same sensors to detect the excessive baseline noise levels in the actual environment. Cameras, radar, and LiDAR can detect noisy conditions such as low light, clutter, and dust particles, respectively.
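Both kinds of noise signal can be sketched in a few lines of Python. The first is a filter-based uncertainty signal, shown here as the normalized innovation squared (NIS) of a one-dimensional Kalman filter, which is one common consistency check and our choice for illustration. The second compares the measured noise floor against a baseline recorded in clear conditions. The thresholds, window handling, and function names are hypothetical.

```python
import statistics

# 95th percentile of a chi-square distribution with 1 degree of freedom.
CHI2_95_1DOF = 3.841


def normalized_innovation_squared(measurement, predicted,
                                  predicted_var, measurement_var):
    """NIS for a scalar Kalman filter: innovation^2 / innovation variance."""
    innovation = measurement - predicted
    innovation_var = predicted_var + measurement_var
    return innovation ** 2 / innovation_var


def noise_floor_ratio(empty_cell_returns, baseline_floor):
    """Median return where no object is tracked, relative to a baseline
    floor recorded in clear conditions (assumed to be calibrated offline)."""
    return statistics.median(empty_cell_returns) / baseline_floor


def is_noisy(nis_window, floor_ratio,
             max_exceed_fraction=0.2, max_floor_ratio=2.0):
    """Flag noisy conditions if either signal looks abnormal."""
    exceed = sum(v > CHI2_95_1DOF for v in nis_window) / len(nis_window)
    return exceed > max_exceed_fraction or floor_ratio > max_floor_ratio


nis_example = normalized_innovation_squared(10.0, 8.0, 1.0, 1.0)
dusty = is_noisy(nis_window=[0.5, 1.0, 5.0, 6.0], floor_ratio=1.0)
clear = is_noisy(nis_window=[0.5, 1.0, 0.2, 0.1, 0.3], floor_ratio=1.0)
```

Recording the baseline in deliberately created dust, clutter, or low-light conditions is what ties the thresholds to conditions in which objects are known to be hard to detect.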

Detecting environmental “noise” supports diversified redundancy

By creating dual sensing channels, one for the primary “detection” signal and the other for the “noise” signal, we arrive at a safety strategy that falls under one or two very common functional safety architectures: Diversified Redundancy and/or Doer-Checker. The argument for safety is that it is highly unlikely that the Perception system would both fail to detect an object and fail to detect the noisy environmental conditions.
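A minimal sketch of the two-channel idea follows. The detection channel acts as the doer and the noise channel as the checker: “no detection” is only reported as a clear path when the checker agrees that conditions are good enough to see. The helper `joint_miss_probability` illustrates the safety argument under an independence assumption, which in practice would have to be justified (for example, through diversity and freedom from interference). All names and numbers are hypothetical.

```python
import math
from enum import Enum


class Verdict(Enum):
    OBJECT = "object"      # something is there
    CLEAR = "clear"        # nothing there, and we could have seen it
    UNKNOWN = "unknown"    # nothing detected, but we cannot trust that


def arbitrate(object_detected, noise_excessive):
    """Combine the detection channel (doer) with the noise channel (checker)."""
    if object_detected:
        return Verdict.OBJECT
    if noise_excessive:
        # Treat the space like an occlusion: react cautiously.
        return Verdict.UNKNOWN
    return Verdict.CLEAR


def joint_miss_probability(p_miss_object, p_miss_noise):
    """Probability that both channels fail together, ASSUMING independence."""
    return p_miss_object * p_miss_noise


verdict_in_dust = arbitrate(object_detected=False, noise_excessive=True)
# Illustrative numbers only, not measured failure rates.
both_fail = joint_miss_probability(p_miss_object=1e-3, p_miss_noise=1e-2)
```

The dangerous case is the `CLEAR` verdict when an object is actually present, and it now requires two failures at once rather than one.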

We hope the industry can move to a safety consensus in autonomous perception. We want to share these ideas to start a dialogue that eventually leads to the implementation of truly safe autonomous perception. Once one or more solutions have been implemented, standardization is soon to follow.

The EGAS standard is a great example of a standardized solution that fulfills another safety standard, ISO 26262. But EGAS is custom-tailored to engine control systems. Perhaps something like EGAS can be written for autonomous perception systems, with practical, implementable guidance. Additional standards that focus on a particular technology, such as engines or autonomous perception, do not contradict well-trusted safety standards like ISO 26262. In fact, they are very complementary.

Is SOTIF the Solution?

The ISO/FDIS 21448 SOTIF draft standard has tried to solve perception safety, but not by developing the robust safety concepts and all the arguments that ISO 26262 requires. Rather, the very premise of SOTIF is based on the assumption that those safety concepts can’t exist with perception systems, and therefore a new process is needed that does not depend on the upfront requirements that ensure safety.

Instead, risk is evaluated after the design or development phases through extensive testing and statistical analysis, in order to see if the product is safe enough. We wrote previously about how safety is never an “intrinsic” property of the whole product, and so these test methods for determining intrinsic properties should never be used to prove safety as a whole. But the real potential value in SOTIF lies in the practical risk reductions it recommends when the risk is unacceptable.

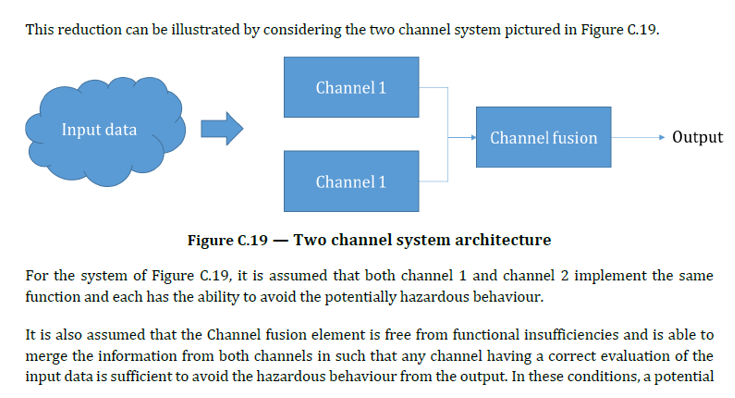

And, unfortunately, this is still an area where much-needed practical guidance is missing. There are no practical examples of how to ultimately reduce risk in SOTIF, even though the whole idea of SOTIF is to eventually reduce risk. Annex C shows a simple dual-channel architecture as a method of risk reduction. If developers are following well-trusted safety standards, such as ISO 26262, they are probably already very familiar with these universal concepts.

ISO/DIS 21448 Annex C shows a redundancy concept identical to ISO 26262 – Part 5 Annex G

By applying diagnostic coverage and redundancy, and ensuring freedom from interference, it appears the SOTIF requirements would be fulfilled by following ISO 26262. Furthermore, by requiring that the risk reduction requirements be written upfront, ISO 26262 greatly lowers the cost of rework and lowers the risk of something accidentally going unnoticed until it’s too late and something bad happens. If SOTIF is adopted as a published standard, it can’t be ruled out that accidents could happen if SOTIF is set up, as it currently is, to look for potential risks after the design and development phases.

What we have been advising our clients from the very beginning is to do the tough job of writing the requirements, and to continue rewriting them until they both fulfill safety, at the top, and achieve commitment from the developers, going down. It requires many iterations of refining and republishing safety requirements throughout the product development lifecycle. But a cohesive and complete rationale for proving that a certain sensor is safe is highly valuable, and it is not worth cutting corners on it in order to appear to move fast.

Given the real risk of liability and the potential harm to any person, it is simply not worth cutting any corners at all, or rushing things in order to appease investors or launch before competitors. In fact, investors have an inherent interest in making sure there are no fatal flaws (I mean that literally) in their products, and should work together with competitors to leverage resources and talent to clarify solutions in this area.

The main goal of this article is to give our readers confidence that, in the end, the hard work pays off. There are many more possible safety concepts than the few ideas we’ve shared here, but however you achieve safe perception, it’s worth it. And in the interest of making a safer and more productive world, we would encourage all developers to share their own high-level ideas for how we can all support safe perception.

We hope you found this article interesting and helpful. Please share this article, or leave us a note below if anything was unclear or incorrect in any way. Thank you for your support in making safe autonomous vehicles. We look forward to sharing more valuable information with you in the future. You can reach out to us anytime on our website or shoot us an email at connect@retrospectav.com.